Abstract

Multimodal large language models (MLLMs) play a pivotal role in the pursuit of general artificial intelligence. However, unifying multimodal understanding and generation under a single objective remains challenging because of optimization conflicts and performance trade-offs between the two tasks. To enhance generative performance while preserving existing comprehension capabilities, we introduce STAR, a STacked AutoRegressive scheme for task-progressive unified multimodal learning. STAR decomposes multimodal learning into successive stages: understanding, generation, and editing. By freezing the parameters of the base autoregressive (AR) model and progressively stacking isomorphic AR modules on top of it, STAR avoids cross-task interference while expanding the model's capabilities. We further introduce a high-capacity vector quantizer (VQ) to increase the granularity of image representations, and employ an implicit reasoning mechanism to improve generation quality under complex conditions. Experiments show that STAR achieves state-of-the-art performance on GenEval (0.91), DPG-Bench (87.44), and ImgEdit (4.34), validating its efficacy for unified multimodal learning.
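For readers new to vector quantization, the core operation of a VQ image tokenizer is a nearest-neighbour lookup: each continuous image feature is replaced by the index of the closest codebook entry. The sketch below is a generic, minimal illustration in pure Python, not STAR's tokenizer; the codebook, feature values, and helper names (`quantize`, `dequantize`) are hypothetical.

```python
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(features: List[Sequence[float]], codebook: List[Sequence[float]]) -> List[int]:
    """Map each feature vector to the index of its nearest codebook entry.

    Hypothetical helper showing a generic VQ lookup, not STAR's actual tokenizer.
    """
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(f, codebook[k]))
        for f in features
    ]


def dequantize(indices: List[int], codebook: List[Sequence[float]]) -> List[Sequence[float]]:
    """Recover the quantized vectors from their token indices."""
    return [codebook[i] for i in indices]


# Toy 2-D codebook with 4 entries; a real high-capacity VQ uses far more entries.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
features = [[0.1, -0.2], [0.9, 0.8], [0.2, 0.7]]
tokens = quantize(features, codebook)
print(tokens)  # [0, 3, 2]
```

Because each token is an argmin over the codebook, appending new entries to an existing codebook can only keep or reduce every feature's quantization error, which is one way to read why a higher-capacity codebook yields finer-grained image representations.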

Understanding

Q: Please describe the content of this image.

A: The image depicts a charming scene of a golden retriever puppy and an orange tabby kitten walking together on a grassy path. The background is filled with vibrant orange flowers, likely lilies, creating a picturesque and serene setting. The sunlight filters through the foliage, casting a warm glow over the entire scene, enhancing the natural beauty and tranquility of the moment. The animals appear to be enjoying their time outdoors, adding a sense of companionship and joy to the image.

Q: Tell me what text is in the picture.

A: The text in the picture reads: ```STAR understand text - to - image editing```

Q: Where is the location shown in the picture?

A: The image shows a section of the Great Wall of China, specifically the Mutianyu section. The Great Wall is an ancient fortification that stretches across northern China and is one of the most recognizable landmarks in the world. The Mutianyu section is located in the Beijing region and is known for its well-preserved sections and scenic views.

Generation

A whimsical scene featuring a small elf with pointed ears and a green hat, sipping orange juice through a long straw from a disproportionately large orange. Next to the elf, a curious squirrel perches on its hind legs, while an owl with wide, observant eyes watches intently from a branch overhead. The orange's vibrant color contrasts with the muted browns and greens of the surrounding forest foliage.

An intricately detailed character drawing by Bastien Lecouffe Deharme featuring an elderly gentleman with long, flowing grey hair. He possesses majestic, large grey wings that extend from his back, conveying both wisdom and strength. The refinement of the character is further accentuated by a polished monocle nestled over one keen eye, and he is dressed in sophisticated attire that hints at a bygone era of elegance.

On the soft, warm sand of the beach, a fluffy white rabbit with rounded ears is caught in a curious moment, gently placing its paw on the ribbed surface of a pink scallop shell. The scallop, slightly open, reveals its smooth interior contrasting with its coarse outer texture, while hues of pink and orange from the setting sun reflect off its surface. There's a tranquil ocean backdrop with the gentle ebbing of the tide, and the fading daylight casts a golden glow over the scene, highlighting the rabbit's soft fur and the shell's subtle color.

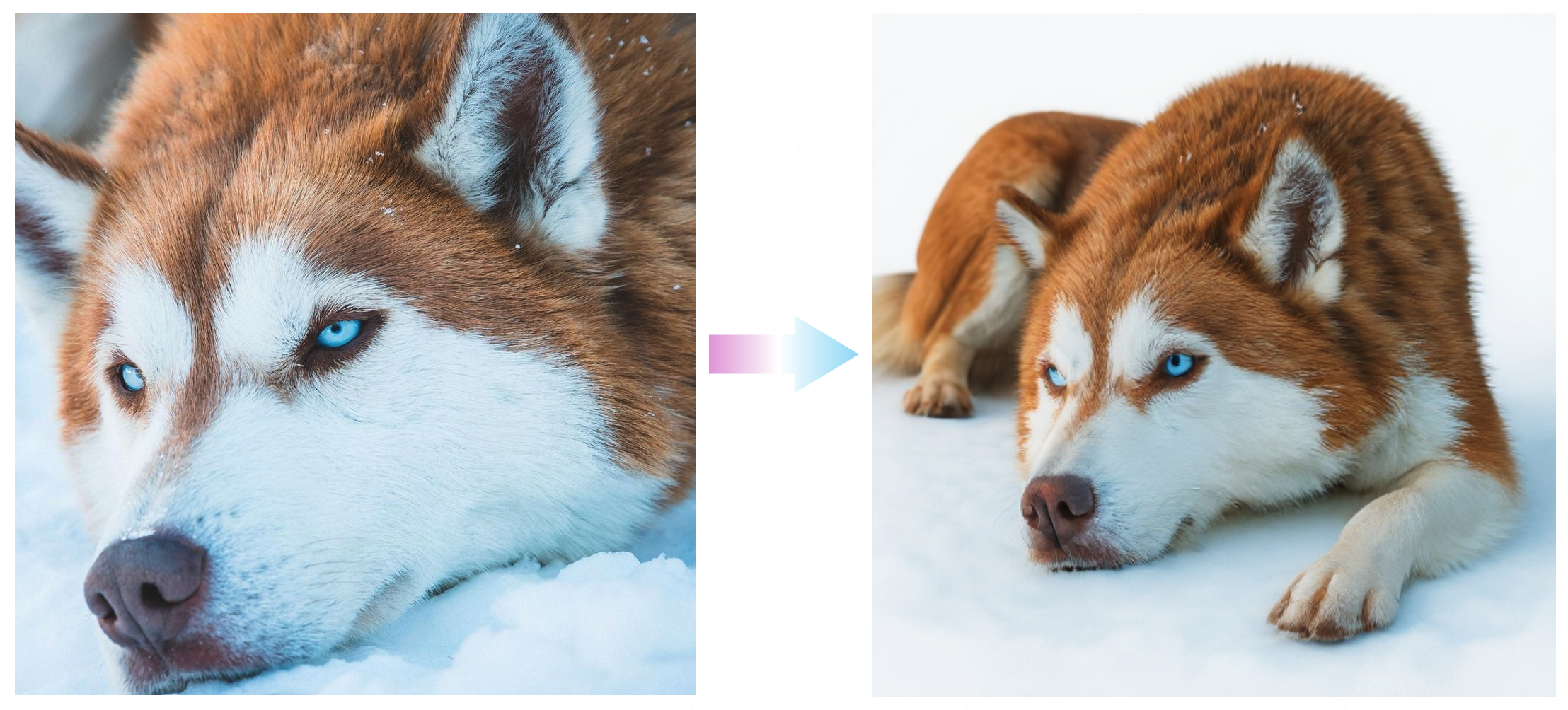

Editing

Remove the tiger in the water.

Add a vintage car driving along the dirt path in the foreground of the image.

Extract the animal in the image, including its full body and fur details.

Knowledge Reasoning

The weapon of Apollo in Greek mythology.

The object used to find the heroine in Cinderella.

The fastest land animal.

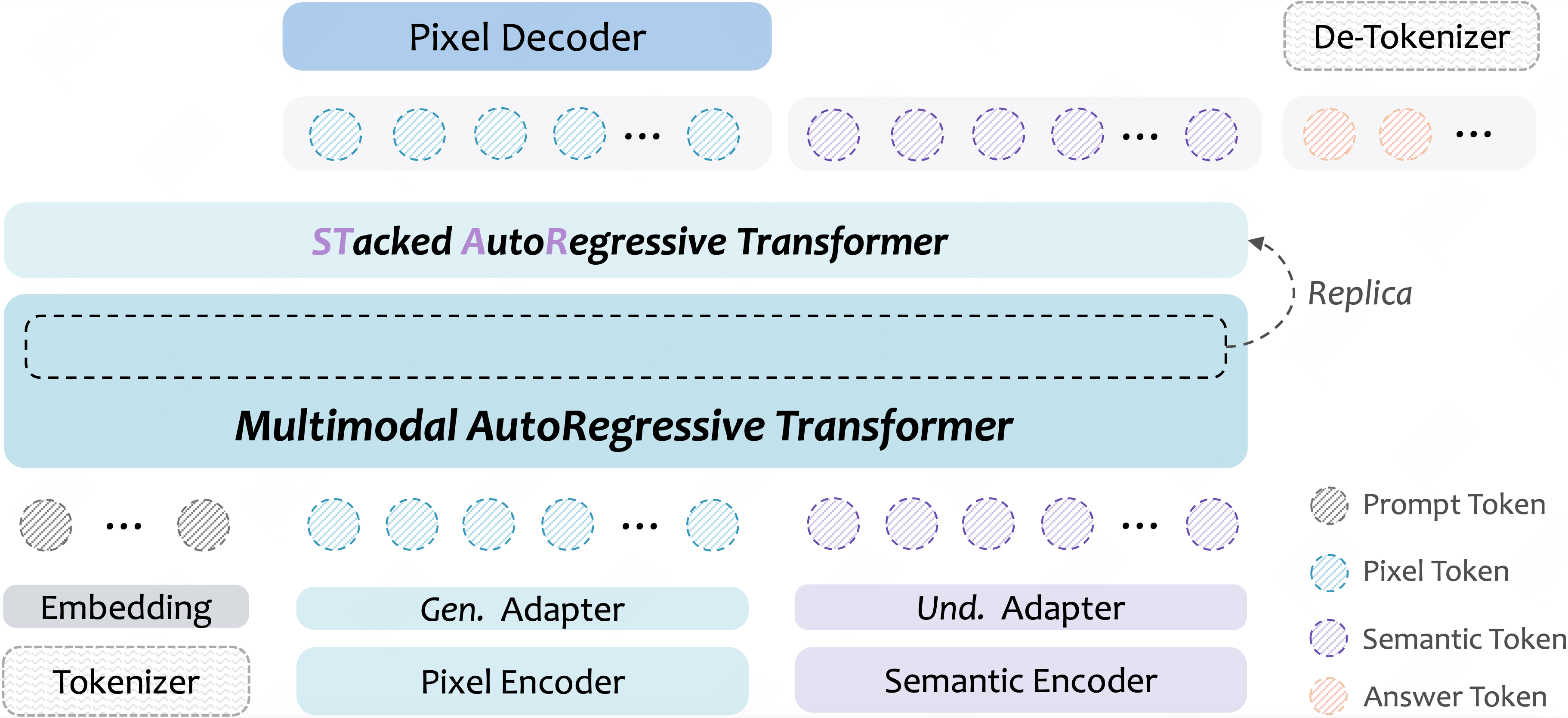

Framework

The overall architecture of STAR. The architecture integrates two visual encoders (pixel and semantic), a multimodal autoregressive transformer, a stacked autoregressive transformer, and a pixel decoder. The stacked AR transformer is replicated from the last N layers of the multimodal AR transformer.
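The replication step in the caption can be sketched as follows, assuming that "replicated" means the stacked module starts from independent copies of the last N layers' weights. `Layer`, `ARTransformer`, and `build_stacked_ar` are hypothetical toy stand-ins (a layer is just a list of floats), not the authors' code.

```python
import copy
from dataclasses import dataclass, field
from typing import List


@dataclass
class Layer:
    """Toy stand-in for a transformer layer: just a list of weights."""
    weights: List[float]


@dataclass
class ARTransformer:
    """Toy stand-in for an AR transformer: an ordered list of layers."""
    layers: List[Layer] = field(default_factory=list)


def build_stacked_ar(base: ARTransformer, n: int) -> ARTransformer:
    """Build a stacked AR module from copies of the base model's last n layers.

    Hypothetical helper; assumes the stack is initialised from those layers' weights.
    """
    if not 0 < n <= len(base.layers):
        raise ValueError("n must be between 1 and the number of base layers")
    # deepcopy gives the stack its own weights, so updating it leaves the base untouched.
    return ARTransformer([copy.deepcopy(layer) for layer in base.layers[-n:]])


base = ARTransformer([Layer([float(i)]) for i in range(6)])
stack = build_stacked_ar(base, n=2)
stack.layers[0].weights[0] += 1.0  # simulate a training update on the stack only
print([l.weights for l in base.layers[-2:]], [l.weights for l in stack.layers])
# [[4.0], [5.0]] [[5.0], [5.0]]
```

Copying rather than sharing the layers matters here: with shared references, any update to the stacked module would silently change the base model as well.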

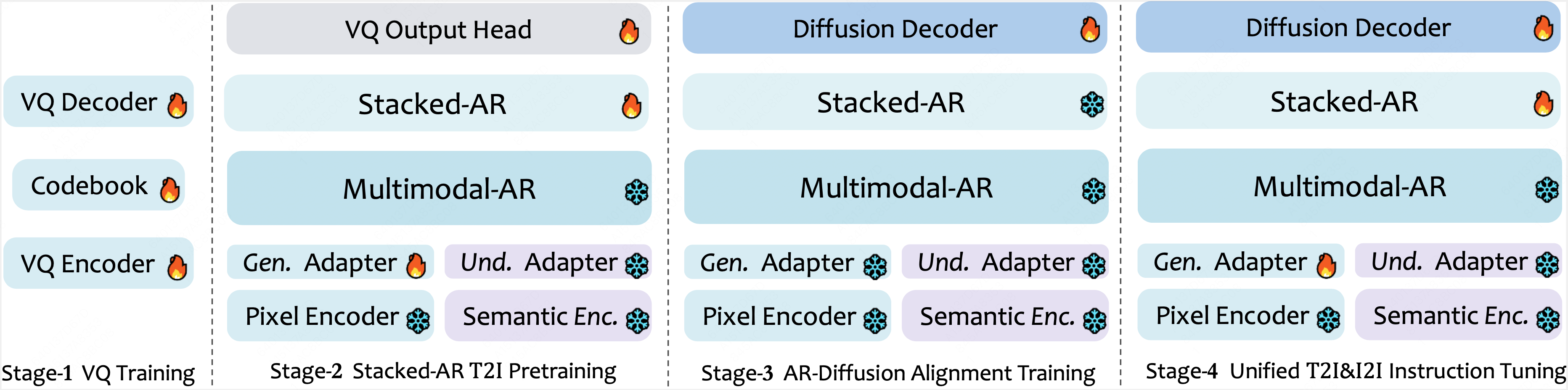

Training Stages

The training stages of STAR comprise four task-progressive phases that successively expand capability while preserving all previously acquired skills.
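One way to read "preserving all previously acquired skills" is a schedule in which each phase trains only the modules it introduces and keeps everything from earlier phases frozen. The sketch below encodes that reading; the assumption that earlier modules are never unfrozen, the helper `progressive_schedule`, and the module names `module_a` to `module_d` are all hypothetical placeholders, not the paper's actual phase contents.

```python
from typing import Dict, List, Set


def progressive_schedule(phases: Dict[str, List[str]]) -> Dict[str, Dict[str, Set[str]]]:
    """For each phase (in order), mark its new modules trainable and all earlier ones frozen.

    Assumption for illustration: once a module is frozen it is never unfrozen.
    """
    schedule: Dict[str, Dict[str, Set[str]]] = {}
    seen: Set[str] = set()
    for phase, new_modules in phases.items():  # dicts preserve insertion order
        overlap = seen.intersection(new_modules)
        if overlap:
            raise ValueError(f"{phase} re-introduces frozen modules: {sorted(overlap)}")
        schedule[phase] = {"trainable": set(new_modules), "frozen": set(seen)}
        seen.update(new_modules)
    return schedule


# Hypothetical placeholder modules, one per phase.
phases = {
    "phase_1": ["module_a"],
    "phase_2": ["module_b"],
    "phase_3": ["module_c"],
    "phase_4": ["module_d"],
}
for name, sets in progressive_schedule(phases).items():
    print(name, "train:", sorted(sets["trainable"]), "frozen:", sorted(sets["frozen"]))
```

Rejecting a phase that re-introduces an already frozen module makes the "never unfreeze" assumption explicit rather than implicit.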

Quantitative Results

Visual Understanding

| Model | #LLM Params | MMB | MMStar | MathVista | SEED | MME-P | MMMU | OCRBench | POPE | DocVQA |
|---|---|---|---|---|---|---|---|---|---|---|
| Seed-X | 13B | 70.1 | - | - | 66.5 | 1457.0 | 35.6 | - | - | - |
| EMU3 | 8B | 58.5 | - | - | 68.2 | 1243.8 | 31.6 | 68.7 | 85.2 | - |
| MetaMorph | 8B | 75.2 | - | - | 71.8 | - | 41.8 | - | - | - |
| Janus | 1.3B | 75.5 | - | - | 63.7 | 1338.0 | 30.5 | - | 87.0 | - |
| Janus-Pro | 7B | 79.2 | - | - | 72.1 | 1567.1 | 41.0 | - | 87.4 | - |
| BLIP3-o | 8B | 83.5 | - | - | 77.5 | 1682.6 | 50.6 | - | - | - |
| Show-o2 | 7B | 79.3 | 56.6 | - | 69.8 | 1620.0 | 48.9 | - | - | - |
| MetaQuery-XL | 7B | 83.5 | - | - | 76.9 | 1685.2 | 58.6 | - | - | - |
| Bagel | 14B | 85.0 | - | 73.1 | - | 1687.0 | 55.3 | - | - | - |
| Ovis-U1 | 1.5B | 77.8 | - | 69.4 | - | - | 51.1 | 88.3 | - | - |
| ILLUME+ | 3B | 80.8 | - | - | 73.3 | 1414.0 | 44.3 | 67.2 | 87.6 | 80.8 |
| X-Omni | 7B | 74.8 | - | - | 74.1 | - | - | 70.4 | 89.3 | 88.6 |
| STAR-3B | 3B | 80.1 | 55.8 | 62.3 | 74.0 | 1592.3 | 53.1 | 79.7 | 85.9 | 93.9 |
| STAR-7B | 7B | 83.9 | 63.9 | 68.1 | 77.0 | 1690.1 | 58.6 | 86.4 | 86.6 | 95.7 |

Text-to-Image Generation

GenEval

| Model | Single Object | Two Objects | Counting | Colors | Position | Color Attributes | Overall |
|---|---|---|---|---|---|---|---|
| Generation-Only Models | | | | | | | |
| SDXL | 0.98 | 0.74 | 0.39 | 0.85 | 0.15 | 0.23 | 0.55 |
| DALL-E 3 | 0.96 | 0.87 | 0.47 | 0.83 | 0.43 | 0.45 | 0.67 |
| SD3-medium | 0.99 | 0.94 | 0.72 | 0.89 | 0.33 | 0.60 | 0.74 |
| FLUX.1-dev | 0.98 | 0.93 | 0.75 | 0.93 | 0.68 | 0.65 | 0.82 |
| OmniGen2 | 0.99 | 0.96 | 0.74 | 0.98 | 0.72 | 0.75 | 0.86 |
| Unified Models | | | | | | | |
| Emu3 | 0.99 | 0.81 | 0.42 | 0.80 | 0.49 | 0.45 | 0.66 |
| ILLUME+ | 0.99 | 0.88 | 0.62 | 0.84 | 0.42 | 0.53 | 0.72 |
| Janus-Pro | 0.99 | 0.89 | 0.59 | 0.90 | 0.79 | 0.66 | 0.80 |
| MetaQuery | - | - | - | - | - | - | 0.80 |
| BLIP3-o | - | - | - | - | - | - | 0.84 |
| UniWorld-V1 | 0.99 | 0.93 | 0.81 | 0.89 | 0.74 | 0.71 | 0.84 |
| Mogao | 1.00 | 0.97 | 0.83 | 0.93 | 0.84 | 0.80 | 0.89 |
| BAGEL | 0.98 | 0.95 | 0.84 | 0.95 | 0.78 | 0.77 | 0.88 |
| Show-o2 | 1.00 | 0.87 | 0.58 | 0.92 | 0.52 | 0.62 | 0.76 |
| GPT-4o | 0.99 | 0.92 | 0.85 | 0.92 | 0.75 | 0.61 | 0.84 |
| X-Omni | 0.98 | 0.95 | 0.75 | 0.91 | 0.71 | 0.68 | 0.83 |
| Ovis-U1 | 0.98 | 0.98 | 0.90 | 0.92 | 0.79 | 0.75 | 0.89 |
| STAR-3B | 0.98 | 0.87 | 0.85 | 0.91 | 0.79 | 0.76 | 0.86 |
| STAR-7B | 0.98 | 0.94 | 0.90 | 0.92 | 0.91 | 0.80 | 0.91 |
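GenEval's Overall score is the unweighted mean of its six task scores. As a quick sanity check, the sketch below recomputes STAR-7B's Overall from its row in the table; `geneval_overall` is a hypothetical helper name, and because the per-task scores are already rounded, a recomputed value can differ from a published Overall by about 0.01.

```python
from statistics import mean
from typing import Dict

# Per-task GenEval scores for STAR-7B, copied from the table above.
star_7b: Dict[str, float] = {
    "single_object": 0.98,
    "two_objects": 0.94,
    "counting": 0.90,
    "colors": 0.92,
    "position": 0.91,
    "color_attributes": 0.80,
}


def geneval_overall(task_scores: Dict[str, float]) -> float:
    """Overall GenEval score: the unweighted mean of the six task scores."""
    return mean(task_scores.values())


print(f"{geneval_overall(star_7b):.2f}")  # 0.91
```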

DPG-Bench

| Model | Global | Entity | Attributes | Relation | Other | Overall |
|---|---|---|---|---|---|---|
| Generation-Only Models | | | | | | |
| SDXL | 83.27 | 82.43 | 80.91 | 86.76 | 80.41 | 74.65 |
| DALL-E 3 | 90.97 | 89.61 | 88.39 | 90.58 | 89.83 | 83.50 |
| SD3-medium | 87.90 | 91.01 | 88.83 | 80.70 | 88.68 | 84.08 |
| FLUX.1-dev | 82.10 | 89.50 | 88.70 | 91.10 | 89.40 | 84.00 |
| OmniGen2 | 88.81 | 88.83 | 90.18 | 89.37 | 90.27 | 83.57 |
| Unified Models | | | | | | |
| Emu3 | 85.21 | 86.68 | 86.84 | 90.22 | 83.15 | 80.60 |
| Janus-Pro | 86.90 | 88.90 | 89.40 | 89.32 | 89.48 | 84.19 |
| MetaQuery | - | - | - | - | - | 82.05 |
| BLIP3-o | - | - | - | - | - | 81.60 |
| UniWorld-V1 | 83.64 | 88.39 | 88.44 | 89.27 | 87.22 | 81.38 |
| Mogao | 82.37 | 90.03 | 88.26 | 93.18 | 85.40 | 84.33 |
| BAGEL | 88.94 | 90.37 | 91.29 | 90.82 | 88.67 | 85.07 |
| Show-o2 | 89.00 | 91.78 | 89.96 | 91.81 | 91.64 | 86.14 |
| GPT-4o | 82.27 | 91.27 | 87.67 | 93.85 | 88.71 | 86.23 |
| X-Omni | 84.80 | 92.59 | 90.63 | 94.75 | 84.20 | 87.65 |
| Ovis-U1 | 82.37 | 90.08 | 88.68 | 93.35 | 85.20 | 83.72 |
| STAR-3B | 93.00 | 90.49 | 91.71 | 90.72 | 92.75 | 87.30 |
| STAR-7B | 94.97 | 92.91 | 91.62 | 94.30 | 83.82 | 87.44 |

WISE (World Knowledge Reasoning)

| Model | Cultural | Time | Space | Biology | Physics | Chemistry | Overall |
|---|---|---|---|---|---|---|---|
| Generation-Only Models | | | | | | | |
| SDXL | 0.43 | 0.48 | 0.47 | 0.44 | 0.45 | 0.27 | 0.43 |
| SD3.5-Large | 0.44 | 0.50 | 0.58 | 0.44 | 0.52 | 0.31 | 0.46 |
| FLUX.1-dev | 0.48 | 0.58 | 0.62 | 0.42 | 0.51 | 0.35 | 0.50 |
| Unified Models | | | | | | | |
| Emu3 | 0.34 | 0.45 | 0.48 | 0.41 | 0.45 | 0.27 | 0.39 |
| Janus-Pro-7B | 0.30 | 0.37 | 0.49 | 0.36 | 0.42 | 0.26 | 0.35 |
| MetaQuery-XL | 0.56 | 0.55 | 0.62 | 0.49 | 0.63 | 0.41 | 0.55 |
| BLIP3-o | - | - | - | - | - | - | 0.62 |
| BAGEL | 0.76 | 0.69 | 0.75 | 0.65 | 0.75 | 0.58 | 0.70 |
| GPT-4o | 0.94 | 0.64 | 0.98 | 0.93 | 0.98 | 0.95 | 0.89 |
| STAR-3B | 0.58 | 0.54 | 0.48 | 0.49 | 0.51 | 0.54 | 0.52 |
| STAR-7B | 0.61 | 0.67 | 0.61 | 0.74 | 0.69 | 0.66 | 0.66 |

Image Editing

MagicBrush

| Model | L1 ↓ | CLIP-I ↑ | DINO ↑ |
|---|---|---|---|
| MagicBrush | 0.074 | 0.908 | 0.847 |
| Instruct-Pix2Pix | 0.114 | 0.851 | 0.744 |
| UltraEdit | 0.066 | 0.904 | 0.852 |
| ICEdit | 0.060 | 0.928 | 0.853 |
| OmniGen | 0.116 | 0.863 | 0.821 |
| UniReal | 0.081 | 0.903 | 0.837 |
| BAGEL | 0.074 | 0.914 | 0.827 |
| STAR-3B | 0.056 | 0.934 | 0.857 |
| STAR-7B | 0.060 | 0.931 | 0.853 |
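The MagicBrush columns compare each edited image with its ground-truth target: L1 is a pixel-level distance (lower is better), while CLIP-I and DINO are cosine similarities between image embeddings from the CLIP and DINO encoders (higher is better). The sketch below shows only the two formulas, assuming flattened images with pixel values scaled to [0, 1]; the embeddings are toy vectors standing in for real encoder outputs, which are not computed here, and the helper names are hypothetical.

```python
import math
from typing import Sequence


def l1_distance(edited: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute pixel difference; assumes flattened images scaled to [0, 1]."""
    if len(edited) != len(target):
        raise ValueError("images must have the same number of pixel values")
    return sum(abs(a - b) for a, b in zip(edited, target)) / len(edited)


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (the form of CLIP-I / DINO scores)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# Toy 4-pixel "images" and 3-D "embeddings" for illustration only.
edited = [0.20, 0.50, 0.90, 0.40]
target = [0.25, 0.50, 0.80, 0.40]
print(round(l1_distance(edited, target), 4))  # 0.0375
print(round(cosine_similarity([1.0, 2.0, 2.0], [2.0, 1.0, 2.0]), 4))  # 0.8889
```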

ImgEdit-Bench

| Model | Add | Adjust | Extract | Replace | Remove | Background | Style | Hybrid | Action | Overall |
|---|---|---|---|---|---|---|---|---|---|---|
| Editing-Only Models | | | | | | | | | | |
| MagicBrush | 2.84 | 1.58 | 1.51 | 1.97 | 1.58 | 1.75 | 2.38 | 1.62 | 1.22 | 1.90 |
| Instruct-Pix2Pix | 2.45 | 1.83 | 1.44 | 2.01 | 1.50 | 1.44 | 3.55 | 1.20 | 1.46 | 1.88 |
| AnyEdit | 3.18 | 2.95 | 1.88 | 2.47 | 2.23 | 2.24 | 2.85 | 1.56 | 2.65 | 2.45 |
| UltraEdit | 3.44 | 2.81 | 2.13 | 2.96 | 1.45 | 2.83 | 3.76 | 1.91 | 2.98 | 2.70 |
| Step1X-Edit | 3.88 | 3.14 | 1.76 | 3.40 | 2.41 | 3.16 | 4.63 | 2.64 | 2.52 | 3.06 |
| ICEdit | 3.58 | 3.39 | 1.73 | 3.15 | 2.93 | 3.08 | 3.84 | 2.04 | 3.68 | 3.05 |
| Unified Models | | | | | | | | | | |
| GPT-4o | 4.61 | 4.33 | 2.90 | 4.35 | 3.66 | 4.57 | 4.93 | 3.96 | 4.89 | 4.20 |
| OmniGen | 3.47 | 3.04 | 1.71 | 2.94 | 2.43 | 3.21 | 4.19 | 2.24 | 3.38 | 2.96 |
| BAGEL | 3.56 | 3.31 | 1.70 | 3.30 | 2.62 | 3.24 | 4.49 | 2.38 | 4.17 | 3.20 |
| UniWorld-V1 | 3.82 | 3.64 | 2.27 | 3.47 | 3.24 | 2.99 | 4.21 | 2.96 | 2.74 | 3.26 |
| OmniGen2 | 3.57 | 3.06 | 1.77 | 3.74 | 3.20 | 3.57 | 4.81 | 2.52 | 4.68 | 3.44 |
| Ovis-U1 | 4.13 | 3.62 | 2.98 | 4.45 | 4.06 | 4.22 | 4.69 | 3.45 | 4.61 | 4.00 |
| STAR-3B | 4.26 | 4.06 | 3.78 | 4.46 | 4.34 | 4.19 | 4.53 | 3.29 | 4.38 | 4.14 |
| STAR-7B | 4.33 | 4.19 | 4.19 | 4.59 | 4.58 | 4.36 | 4.59 | 3.67 | 4.60 | 4.34 |

BibTeX

@article{qin2025star,
  title={STAR: STacked AutoRegressive Scheme for Unified Multimodal Learning},
  author={Qin, Jie and Huang, Jiancheng and Qiao, Limeng and Ma, Lin},
  journal={arXiv preprint arXiv:2512.13752},
  year={2025}
}